Source: Zero Hedge

Dozens of Facebook content moderators from around the world are calling for the company to put an end to ‘overly restrictive nondisclosure agreements’ (NDAs) which discourage workers from speaking out about horrific working conditions, according to The Verge.

“Despite the company’s best efforts to keep us quiet, we write to demand the company’s culture of fear and excessive secrecy ends today,” the group of at least 60 moderators wrote in a letter to Mark Zuckerberg, Sheryl Sandberg and the CEOs of contracting companies Covalen and Accenture. “No NDA can lawfully prevent us from speaking out about our working conditions.”

The news comes amid escalating tension between the company and its contract content moderators in Ireland. In May, a moderator named Isabella Plunkett testified before a parliamentary committee to try to push for legislative change.

“The content that is moderated is awful,” she said. “It would affect anyone … To help, they offer us wellness coaches. These people mean really well, but they are not doctors. They suggest karaoke and painting, but frankly, one does not always feel like singing, after having seen someone be battered to bits.”

The letter asks that the company give moderators regular access to clinical psychiatrists and psychologists. “Imagine watching hours of violent content or children abuse online as part of your day to day work,” they write. “You cannot be left unscathed. This job must not cost us our mental health.” -The Verge

Horror stories from Facebook’s PTSD-stricken content moderators are nothing new. Anonymous moderators have leaked details several times in the past, and working conditions have also come to light through lawsuits. In 2018, a California woman sued the social media giant after she was “exposed to highly toxic, unsafe, and injurious content during her employment as a content moderator at Facebook.”

Selena Scola moderated content for Facebook as an employee of contractor Pro Unlimited, Inc. between June 2017 and March 2018, according to her complaint.

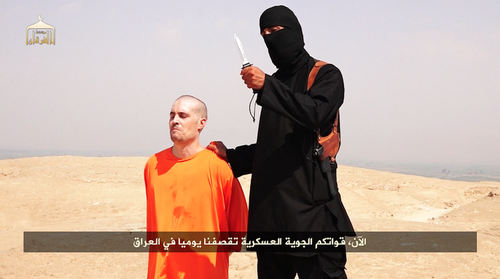

“Every day, Facebook users post millions of videos, images, and livestreamed broadcasts of child sexual abuse, rape, torture, bestiality, beheadings, suicide, and murder,” the lawsuit reads. “To maintain a sanitized platform, maximize its already vast profits, and cultivate its public image, Facebook relies on people like Ms. Scola known as content moderators to view those posts and remove any that violate the corporation’s terms of use.”

As a result of having to review this content, Scola says she “developed and suffers from significant psychological trauma and post-traumatic stress disorder (PTSD).” However, she does not detail the specific imagery she was exposed to, for fear that Facebook would enforce a non-disclosure agreement (NDA) she signed.

“You’d go into work at 9am every morning, turn on your computer and watch someone have their head cut off. Every day, every minute, that’s what you see. Heads being cut off,” another content moderator told the Guardian at the time.

Moderators also want to be official Facebook employees rather than being held at arm’s length through contractors, under which arrangement they don’t receive the same pay or benefits as full-time Facebook moderators.

“Facebook content moderators worldwide work grueling shifts wading through a never-ending flood of the worst material on the internet,” wrote Foxglove director Martha Dark in a statement. “Yet, moderators don’t get proper, meaningful, clinical long term mental health support, they have to sign highly restrictive NDAs to keep them quiet about what they’ve seen and the vast majority of the workforce are employed through outsourcing companies where they don’t receive anywhere near the same support and benefits Facebook gives its own staff.”

Facebook pushed back against the moderators. “We recognize that reviewing content can be a difficult job, which is why we work with partners who support their employees through training and psychological support when working with challenging content,” a spokesperson said in a statement. “In Ireland, this includes 24/7 on-site support with trained practitioners, an on-call service, and access to private healthcare from the first day of employment. We also use technology to limit their exposure to graphic material as much as possible.”